Introduction

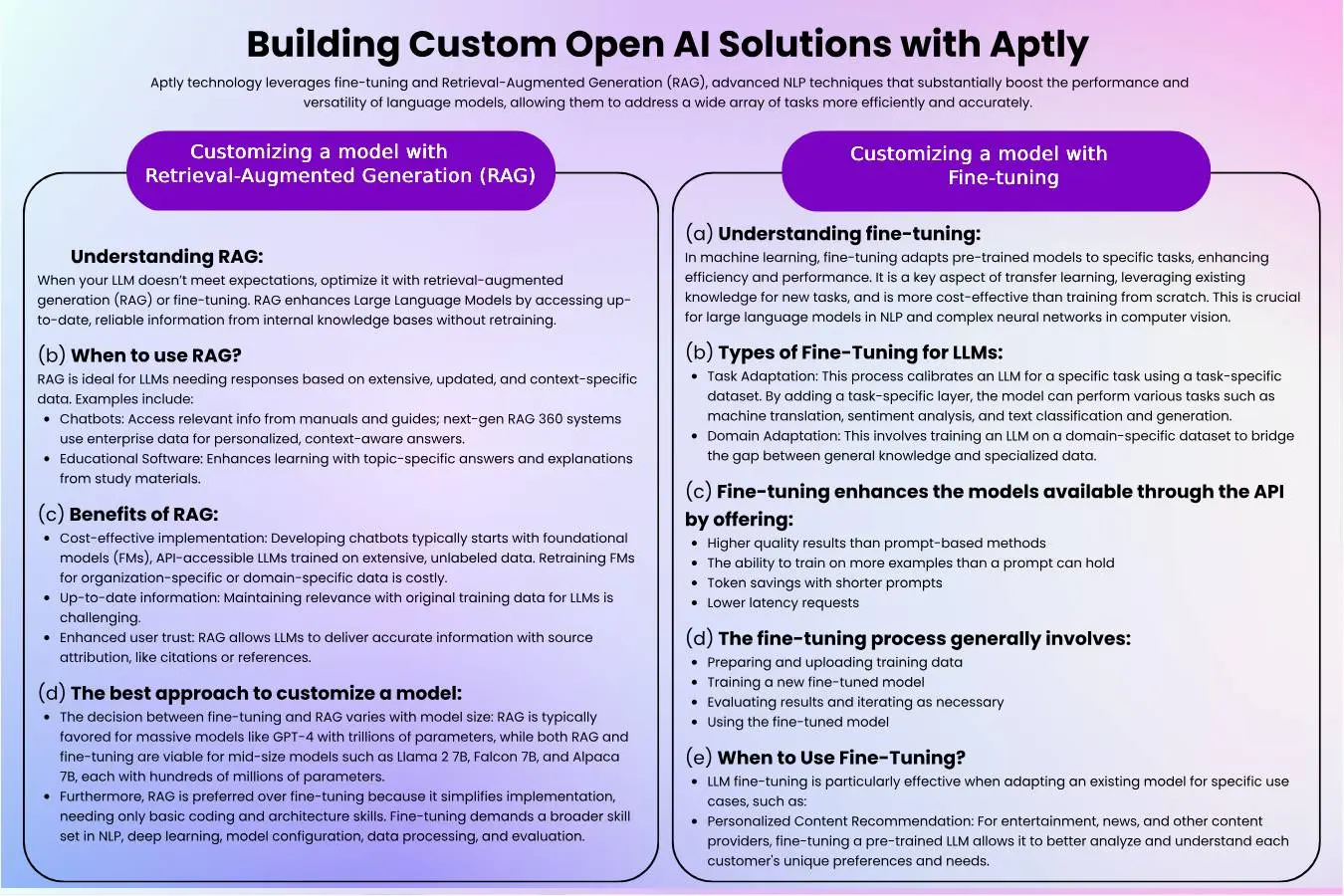

In the rapidly evolving field of artificial intelligence, businesses and developers are constantly seeking ways to leverage cutting-edge technologies to solve complex problems, streamline operations, and create innovative solutions. OpenAI, a leader in AI research, provides powerful models such as GPT-4 that can be applied to a wide variety of tasks. However, to truly unlock the potential of these models, a customized approach is often necessary. This is where Aptly comes into the picture.

Aptly Technology offers custom OpenAI solutions that enable the customization, deployment, and fine-tuning of OpenAI models using Azure’s robust cloud infrastructure. In this blog post, we will explore how to build, deploy, and fine-tune custom OpenAI solutions using Azure OpenAI.

Building Custom OpenAI Solutions with Aptly

Deploying Custom OpenAI Solutions

a) Aptly Technology supports the following OpenAI model families in its retrieval-augmented generation (RAG) and fine-tuning solutions:

- GPT-4o

- GPT-4 Turbo

- GPT-4

- GPT-3.5 Turbo

- DALL·E

- TTS

- Whisper

- Embeddings

- Moderation

- GPT base

b) Aptly Technology supports the following OpenAI embedding models, which convert text into vectors for the retrieval step of retrieval-augmented generation (RAG):

- text-embedding-3-small

- text-embedding-3-large

- text-embedding-ada-002
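Embedding models map text to vectors, and RAG retrieval typically ranks documents by the cosine similarity between the query vector and each document vector. The minimal sketch below shows that calculation in plain Python. The three-dimensional vectors are made-up stand-ins for real embeddings (text-embedding-3-small, for instance, returns 1536-dimensional vectors by default), and the variable names are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


# Toy vectors standing in for embeddings returned by an embedding model.
query = [0.1, 0.9, 0.0]
doc_about_billing = [0.2, 0.8, 0.1]
doc_about_hiring = [0.9, 0.0, 0.4]

print(cosine_similarity(query, doc_about_billing))  # high: similar direction
print(cosine_similarity(query, doc_about_hiring))   # low: different direction
```

In a real pipeline the vectors would come from one of the embedding models above, and the document with the highest score would be passed to the LLM as context.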

c) Deploying a fine-tuned model:

Data Preparation:

- Data Collection: Gather domain-specific datasets relevant to the task you want to fine-tune the model for. Make sure the data is high quality and large enough to achieve the desired performance.

- Preprocessing: Clean and preprocess the data to remove noise, standardize formats, and prepare it for training.
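As a concrete illustration of the preprocessing step, the sketch below normalizes text and drops empty and duplicate prompt/response pairs. It is one possible cleaning pass under simple assumptions (pairs of strings, case-insensitive duplicates), not a prescribed pipeline; the function names and sample records are hypothetical.

```python
import re
import unicodedata


def clean_text(text: str) -> str:
    """Normalize Unicode, drop control characters, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)  # e.g. non-breaking space -> space
    # Remove control characters (category "Cc") but keep whitespace such as \n and \t.
    text = "".join(ch for ch in text if ch.isspace() or unicodedata.category(ch) != "Cc")
    return re.sub(r"\s+", " ", text).strip()


def preprocess(records: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Clean (prompt, response) pairs, dropping empty and duplicate examples."""
    seen = set()
    cleaned = []
    for prompt, response in records:
        prompt, response = clean_text(prompt), clean_text(response)
        if not prompt or not response:
            continue  # an incomplete pair gives the model nothing to learn
        key = (prompt.casefold(), response.casefold())
        if key in seen:
            continue  # duplicate after normalization
        seen.add(key)
        cleaned.append((prompt, response))
    return cleaned


raw = [
    ("  What is our refund   policy? ", "Refunds are issued within 30 days."),
    ("what is our refund policy?", "Refunds are issued within 30 days."),
    ("Empty answer", "   "),
    ("How do I reset my password?\u00a0", "Use the Forgot password link."),
]
cleaned = preprocess(raw)
for pair in cleaned:
    print(pair)
```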

Model Selection:

- Choose a pre-trained large language model (LLM) that supports fine-tuning. Popular choices include GPT-3.5 Turbo and other OpenAI models that can be fine-tuned.

- Identify which parameters and hyperparameters to adjust during fine-tuning based on the task requirements.

Fine-Tuning Process:

- Start from the pre-trained LLM’s weights rather than training a model from scratch.

- Train the model on the domain-specific fine-tuning dataset, adjusting its weights to optimize performance on the target task.

- Evaluate the fine-tuned model on a held-out validation dataset to confirm it meets the desired accuracy and performance metrics.
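Evaluating on validation data only works if that data was held out from training. A minimal way to do this is a seeded random split, sketched below; the 20% fraction and the seed value are illustrative choices, and `train_validation_split` is a hypothetical helper, not part of any SDK.

```python
import random


def train_validation_split(examples, validation_fraction=0.1, seed=42):
    """Shuffle a copy of the examples and hold out a validation set."""
    if not 0 < validation_fraction < 1:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(examples)
    # A local RNG keeps the split reproducible without touching global random state.
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, round(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]


examples = [f"example-{i}" for i in range(50)]
train, validation = train_validation_split(examples, validation_fraction=0.2)
print(len(train), len(validation))  # 40 10
```

Because the split is seeded, rerunning it yields the same partition, so validation metrics from different training runs stay comparable.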

Integration and Deployment:

- Package the fine-tuned model for deployment, ensuring compatibility with the target platform (e.g., cloud services or on-premises servers).

- Expose the model through APIs or SDKs so it integrates cleanly with existing applications and workflows.

- Test thoroughly to validate the model’s behavior under production conditions, confirming that it handles real-world data and queries effectively.
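One lightweight form of this testing is a smoke-test suite: a fixed set of prompts paired with text each answer is expected to contain. The sketch below assumes the model is wrapped as a plain `prompt -> answer` function; `fake_model` is a hypothetical stand-in you would replace with a call to your deployed model, and substring matching is a deliberately simple pass criterion.

```python
from typing import Callable


def run_smoke_tests(model: Callable[[str], str],
                    cases: list[tuple[str, str]]) -> list[str]:
    """Return failure messages; an empty list means every case passed."""
    failures = []
    for prompt, expected_substring in cases:
        try:
            answer = model(prompt)
        except Exception as exc:  # a crash counts as a failed case
            failures.append(f"{prompt!r}: raised {exc!r}")
            continue
        if expected_substring.lower() not in answer.lower():
            failures.append(f"{prompt!r}: expected {expected_substring!r} in {answer!r}")
    return failures


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for the deployed model."""
    if "refund" in prompt.lower():
        return "Refunds are issued within 30 days of purchase."
    return "I'm not sure."


cases = [
    ("What is the refund window?", "30 days"),
    ("How do I reset my password?", "forgot password"),
]
failures = run_smoke_tests(fake_model, cases)
for failure in failures:
    print("FAIL", failure)
```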

Monitoring and Maintenance:

- Set up monitoring tools to track model performance after deployment.

- Update and retrain fine-tuned models regularly so they keep pace with evolving data and user needs.

- Provide ongoing support to resolve issues and optimize model performance over time.
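Post-deployment monitoring often starts with a couple of service-level signals. The sketch below keeps a rolling window of recent requests and raises alerts when the error rate or 95th-percentile latency crosses a threshold. The window size and thresholds are illustrative assumptions, and `RollingMonitor` is a hypothetical class rather than an Azure monitoring API.

```python
import math
from collections import deque


class RollingMonitor:
    """Keep the last `window` requests and flag error-rate or latency regressions."""

    def __init__(self, window: int = 100, max_error_rate: float = 0.05,
                 max_p95_latency_s: float = 2.0) -> None:
        self.failures = deque(maxlen=window)   # True when a request failed
        self.latencies = deque(maxlen=window)  # seconds per request
        self.max_error_rate = max_error_rate
        self.max_p95_latency_s = max_p95_latency_s

    def record(self, latency_s: float, failed: bool) -> None:
        self.latencies.append(latency_s)
        self.failures.append(failed)

    def alerts(self) -> list[str]:
        if not self.latencies:
            return []
        found = []
        error_rate = sum(self.failures) / len(self.failures)
        if error_rate > self.max_error_rate:
            found.append(f"error rate {error_rate:.0%} exceeds {self.max_error_rate:.0%}")
        ordered = sorted(self.latencies)
        p95 = ordered[math.ceil(0.95 * len(ordered)) - 1]  # nearest-rank percentile
        if p95 > self.max_p95_latency_s:
            found.append(f"p95 latency {p95:.2f}s exceeds {self.max_p95_latency_s:.2f}s")
        return found


monitor = RollingMonitor(window=20)
for i in range(20):
    # Simulated traffic: every fifth request fails and the last two are slow.
    monitor.record(latency_s=3.0 if i >= 18 else 0.5, failed=(i % 5 == 0))
for alert in monitor.alerts():
    print("ALERT:", alert)
```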

d) Deploying a RAG model:

Knowledge Base Setup:

- Establish and curate external knowledge bases (e.g., databases, documents) containing the information the model needs to retrieve.

- Keep these knowledge bases accessible and update them regularly to maintain accuracy.

Model Integration:

- Connect a pre-trained LLM to a retrieval component in the deployment environment.

- Configure the pipeline so the model can draw on the external knowledge bases efficiently during generation.

Query Handling:

- Develop mechanisms for handling user queries that require retrieving and integrating external information.

- Implement ranking strategies that retrieve the most relevant data from the knowledge bases for each user query.

Testing and Validation:

- Test the RAG model thoroughly to ensure accurate retrieval and well-grounded responses.

- Validate that the model handles varied query types while keeping its responses coherent and relevant.

Deployment and Optimization:

- Deploy the RAG-enabled model in production environments, ensuring scalability and reliability.

- Optimize performance by tuning the retrieval algorithms or updating the knowledge bases as needed.

Monitoring and Support:

- Monitor the RAG model’s performance continuously to detect and address issues promptly.

- Provide user support and training so teams can use RAG-powered applications effectively.
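The retrieval and query-handling steps above can be sketched end to end: score each knowledge-base chunk against the query, keep the top matches, and place them in a prompt that tells the model to answer only from those sources. To stay self-contained, this sketch swaps in a bag-of-words term-count vector as a toy stand-in for an embedding model; a production system would use one of the embedding models listed earlier and a vector index. All names and sample texts are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Ground the model by asking it to answer only from the retrieved sources."""
    sources = "\n".join(f"[{i + 1}] {c}" for i, c in enumerate(context))
    return (
        "Answer the question using only the sources below. "
        "If the answer is not in the sources, say you don't know.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


knowledge_base = [
    "Refunds are issued within 30 days of purchase.",
    "Support is available Monday to Friday, 9am to 5pm.",
    "Passwords can be reset from the account settings page.",
]
question = "How long do refunds take after purchase?"
top = retrieve(question, knowledge_base, k=1)
print(build_prompt(question, top))
```

The resulting prompt would then be sent to the deployed chat model; keeping the "answer only from the sources" instruction explicit is one common way to reduce ungrounded answers.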

Building Custom AI Models with Aptly Using Azure OpenAI

Detailed Workflow for Fine-Tuning in Azure OpenAI Studio

Prepare Your Training and Validation Data:

- Format: JSON Lines (JSONL) files encoded in UTF-8 with a byte-order mark (BOM).

- Size: Each file must be less than 512 MB.

- Content: Ensure the data includes high-quality input and output examples as they significantly influence the model’s performance.
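The format rules above can be met with the Python standard library alone. The sketch below writes chat-format examples (the `messages` structure used for chat models such as gpt-35-turbo; babbage-002 and davinci-002 use prompt/completion pairs instead) to a JSONL file with a UTF-8 byte-order mark and checks the size limit. Interpreting "512 MB" as 512 × 10^6 bytes is a conservative assumption, and the file name and example content are hypothetical.

```python
import json
import os
import tempfile

# Documented limit: each file must be less than 512 MB.
# Treating 1 MB as 10**6 bytes is a deliberately conservative assumption.
MAX_BYTES = 512 * 10**6


def write_chat_jsonl(path: str, examples: list[dict]) -> int:
    """Write one example per line, UTF-8 with BOM, and return the file size in bytes."""
    # The "utf-8-sig" codec writes the byte-order mark (EF BB BF) before the first line.
    with open(path, "w", encoding="utf-8-sig", newline="\n") as f:
        for example in examples:
            f.write(json.dumps(example, ensure_ascii=False) + "\n")
    size = os.path.getsize(path)
    if size >= MAX_BYTES:
        raise ValueError(f"{path} is {size} bytes; split it into smaller files")
    return size


examples = [
    {"messages": [
        {"role": "system", "content": "You are a support assistant for a hypothetical company."},
        {"role": "user", "content": "What is the refund window?"},
        {"role": "assistant", "content": "Refunds are issued within 30 days of purchase."},
    ]},
]
path = os.path.join(tempfile.gettempdir(), "training.jsonl")
print(write_chat_jsonl(path, examples), "bytes written to", path)
```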

Using the Create Custom Model Wizard:

- Open Azure OpenAI Studio.

- Sign in with your Azure credentials and select the appropriate directory, subscription, and resource.

- Navigate to Management > Models and select Create a Custom Model.

Steps to Create a Custom Model:

Select a Base Model:

- Choose from available models (e.g., babbage-002, davinci-002, gpt-35-turbo versions, gpt-4).

- The choice of base model impacts both the performance and cost.

Upload Training Data:

- Choose to upload new data or use existing datasets.

- For large files, prefer uploading via Azure Blob Storage for stability.

Upload Validation Data:

- Optionally, upload a separate validation dataset.

- Validation data helps you monitor how well the model generalizes during training.

Configure Advanced Options:

- Set parameters such as batch size, learning rate multiplier, number of epochs, and a seed for reproducibility.

Review and Train Your Model:

- Review your settings in the wizard and initiate the training job.

- Track the job’s status in the Models pane on the Customized models tab.

Deploy and Use Your Custom Model:

- Once training is complete, deploy the model from the Models Pane.

- Optionally, analyze the model’s performance to ensure it meets your requirements.

Monitor Model Status and Performance:

- Checkpoints: A checkpoint is generated after each training epoch. These snapshots let you fall back to an earlier version of the model if later epochs overfit.

- Evaluation: The fine-tuned model is checked for harmful content before it is made available.

- Safety: Especially for GPT-4, additional safety evaluations are conducted.

Training and Validation Data Requirements

- Format: JSONL

- Encoding: UTF-8 with BOM

- Size Limit: Less than 512 MB per file

- Quality: High-quality examples are crucial for optimal model performance.
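Before uploading, it can save a failed job to sanity-check each line of a training file. The sketch below applies a few basic checks to chat-format JSONL; these are illustrative rules of our own (valid JSON, a non-empty `messages` list, known roles, at least one assistant message to learn from), not the service’s full validation logic.

```python
import json

VALID_ROLES = {"system", "user", "assistant"}


def validate_chat_jsonl(lines) -> list[str]:
    """Return human-readable problems found in chat-format JSONL lines.

    These are basic sanity checks, not the service's full validation rules.
    """
    problems = []
    for n, line in enumerate(lines, start=1):
        if not line.strip():
            problems.append(f"line {n}: empty line")
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {n}: invalid JSON ({exc.msg})")
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"line {n}: missing a non-empty 'messages' list")
            continue
        if not all(
            isinstance(m, dict) and m.get("role") in VALID_ROLES and isinstance(m.get("content"), str)
            for m in messages
        ):
            problems.append(f"line {n}: every message needs a known role and string content")
        elif not any(m["role"] == "assistant" for m in messages):
            problems.append(f"line {n}: no assistant message for the model to learn from")
    return problems


sample = [
    '{"messages": [{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hello!"}]}',
    '{"messages": [{"role": "user", "content": "Hi"}]}',
    "not json",
]
for problem in validate_chat_jsonl(sample):
    print(problem)
```

To check a file on disk, open it with `encoding="utf-8-sig"` so the byte-order mark is stripped before parsing; otherwise `json.loads` rejects the first line.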

Data Upload Methods

- Local File Upload: Drag and drop or browse to select the file.

- Azure Blob Storage: Provide the file name and location URL.

Advanced Configuration Options

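- Batch Size: Number of training examples used in a single forward and backward pass.

- Learning Rate Multiplier: Scales the learning rate; the fine-tuning learning rate is the original pre-training learning rate multiplied by this value.

- Epochs: Number of full passes through the training dataset.

- Seed: Controls reproducibility; the same seed and job parameters should produce the same results, though they can differ in rare cases.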
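These options end up as fields in a fine-tuning job request. The sketch below assembles such a request body as a Python dictionary. The field names (`model`, `training_file`, `validation_file`, `hyperparameters` with `n_epochs`, `batch_size`, `learning_rate_multiplier`, and `seed`) follow the OpenAI fine-tuning jobs API; we assume the Azure OpenAI REST API accepts the same shape for your `api-version`, so confirm against its reference before relying on it. The validation rules and the file ID are illustrative. For continuous fine-tuning, `base_model` would be the name of an existing fine-tuned model.

```python
import json
from typing import Optional


def build_fine_tuning_job(
    base_model: str,
    training_file_id: str,
    validation_file_id: Optional[str] = None,
    n_epochs: Optional[int] = None,
    batch_size: Optional[int] = None,
    learning_rate_multiplier: Optional[float] = None,
    seed: Optional[int] = None,
) -> dict:
    """Assemble a fine-tuning job body; omitted hyperparameters are left to service defaults."""
    if n_epochs is not None and n_epochs < 1:
        raise ValueError("n_epochs must be at least 1")
    if batch_size is not None and batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    if learning_rate_multiplier is not None and learning_rate_multiplier <= 0:
        raise ValueError("learning_rate_multiplier must be positive")

    hyperparameters = {
        name: value
        for name, value in [
            ("n_epochs", n_epochs),
            ("batch_size", batch_size),
            ("learning_rate_multiplier", learning_rate_multiplier),
        ]
        if value is not None
    }
    job = {"model": base_model, "training_file": training_file_id}
    if validation_file_id:
        job["validation_file"] = validation_file_id
    if hyperparameters:
        job["hyperparameters"] = hyperparameters
    if seed is not None:
        job["seed"] = seed
    return job


# "file-train-123" is a placeholder for the ID returned when you upload training data.
job = build_fine_tuning_job("gpt-35-turbo-0613", "file-train-123", n_epochs=3, seed=42)
print(json.dumps(job, indent=2))
```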

Safety and Evaluation

- Data Evaluation: Pre-training check for harmful content.

- Model Evaluation: Post-training check for harmful responses.

By following these steps, you can effectively fine-tune a model using Azure OpenAI, ensuring it meets your specific needs while maintaining performance and safety standards.

Deploy and Use Your Fine-Tuned Model

Deploy the Model:

- After a successful fine-tuning job, deploy the custom model from the Models pane to make it available for use.

- Only one deployment is allowed per custom model. If a deployment stays inactive for more than 15 days, it is deleted and the model must be redeployed.

Monitor Deployment:

- Track deployment progress in the Deployments pane of Azure OpenAI Studio.

- For cross-region deployments, make sure the destination region also supports fine-tuning and that the authorization token covers both the source and destination subscriptions.

Use the Deployed Model:

- Use the model like any other deployed model in Azure OpenAI Studio’s Playgrounds.

- For babbage-002 and davinci-002, use the Completions playground and API.

- For gpt-35-turbo-0613, use the Chat playground and API.
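Outside the playgrounds, a deployed chat model is called through the deployment’s REST endpoint. The sketch below builds a Chat Completions request with only the standard library and includes a `send` helper that is not called here. The resource URL, deployment name, and key are hypothetical, and `2024-02-01` is an assumed `api-version`; use one your resource supports.

```python
import json
import urllib.request

# Assumption: use an api-version that your Azure OpenAI resource supports.
API_VERSION = "2024-02-01"


def build_chat_request(endpoint: str, deployment: str, api_key: str,
                       messages: list[dict],
                       api_version: str = API_VERSION) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request for an Azure OpenAI deployment."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={api_version}")
    body = json.dumps({"messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


# Hypothetical resource, deployment name, and key.
request = build_chat_request(
    endpoint="https://my-resource.openai.azure.com",
    deployment="my-fine-tuned-gpt35",
    api_key="<your-api-key>",
    messages=[{"role": "user", "content": "What is the refund window?"}],
)
print(request.get_method(), request.full_url)
```

For babbage-002 and davinci-002 deployments, the equivalent call targets the deployment’s `/completions` path with a `prompt` field instead of `messages`.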

Analyze the Model:

- Azure OpenAI attaches a results.csv file to each fine-tuning job for performance analysis.

- This file includes metrics like training loss, validation loss, and token accuracy.

- View these metrics as plots in Azure OpenAI Studio for performance insights.
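The same metrics can also be summarized programmatically after downloading results.csv. The sketch below assumes the columns `step`, `train_loss`, and `valid_loss` (check your file’s header row, since column names may differ) and treats rows with an empty `valid_loss` as steps without a validation measurement. The sample rows are illustrative numbers, not real training output.

```python
import csv
import io


def summarize_results(csv_text: str) -> dict:
    """Summarize training and validation loss from a results.csv-style file."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        raise ValueError("results.csv has no data rows")
    summary = {"steps": len(rows), "final_train_loss": float(rows[-1]["train_loss"])}
    valid = [(int(r["step"]), float(r["valid_loss"])) for r in rows if r.get("valid_loss")]
    if valid:
        best_step, best_loss = min(valid, key=lambda pair: pair[1])
        summary["best_valid_step"] = best_step
        summary["best_valid_loss"] = best_loss
        # A final validation loss well above the best one can hint at overfitting.
        summary["final_valid_loss"] = valid[-1][1]
    return summary


# Illustrative numbers only.
sample_csv = """step,train_loss,train_mean_token_accuracy,valid_loss,validation_mean_token_accuracy
1,2.10,0.55,2.20,0.53
2,1.40,0.66,1.60,0.62
3,0.90,0.75,1.50,0.64
4,0.50,0.86,1.70,0.63
"""
summary = summarize_results(sample_csv)
print(summary)
```

In this made-up run, training loss keeps falling while validation loss bottoms out at step 3, which is the pattern the per-epoch checkpoints help with.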

Clean Up:

1) Delete Deployments:

- Inactive deployments are automatically deleted after 15 days.

- Manually delete deployments from the Deployments pane.

2) Delete Custom Models:

- Delete models from the Models pane after removing their deployments.

3) Delete Training Files:

- Optionally, delete training and validation files from the Data files pane.

Continuous Fine-Tuning:

- Refine your model iteratively by using an already fine-tuned model as the base for further training.

By following these steps, you can efficiently manage and utilize fine-tuned models in Azure OpenAI, ensuring optimal performance and cost management.

Conclusion

Aptly combines fine-tuning and RAG systems to build custom OpenAI solutions tailored to specific business needs. By utilizing Aptly’s user-friendly interface and the robust infrastructure of Azure OpenAI Service, developers can create, deploy, and fine-tune AI models that deliver significant value. Whether you’re looking to automate tasks, enhance customer experiences, or create innovative products, Aptly provides the tools and support you need to succeed in the ever-evolving world of artificial intelligence.