Introduction

The evolution of Artificial Intelligence (AI) has necessitated powerful hardware solutions, with Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs) being at the forefront. In the ongoing TPU vs GPU conversation, GPUs—initially designed for graphics rendering—have evolved into versatile processors thanks to their parallel processing capabilities, making them highly effective for a wide range of AI tasks.

Nvidia's GeForce 256, released in 1999, was marketed as the first fully integrated GPU for PCs, a significant milestone in this domain. TPUs, by contrast, were developed by Google and are purpose-built for AI computations, delivering optimized performance for machine learning workloads through their application-specific design.

But which one is best for optimizing AI infrastructure, and what impact has each had on AI?

This article provides an in-depth comparison of TPUs and GPUs, focusing on technical aspects such as performance, scalability, and their pros and cons. It also highlights how both technologies have propelled the progress of AI.

Understanding GPUs and TPUs in the Context of AI

What is a GPU?

A Graphics Processing Unit (GPU) is a high-speed circuit designed to process many mathematical operations in parallel, which makes it well suited to graphics rendering as well as workloads such as machine learning and blockchain. Since the late 1990s, GPUs have evolved from simple image display tools into powerful computing devices, and Nvidia's CUDA platform, released in 2007, opened them up to general-purpose parallel computing.

Known as the “gold” of artificial intelligence, GPUs are foundational for generative AI due to three key factors:

- Parallel Processing: GPUs handle multiple parts of a task simultaneously.

- Scalability: They can scale to supercomputing levels.

- Software Stack: Their extensive software stack enhances AI capabilities.
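The parallel-processing idea can be illustrated with the indexing scheme GPU kernels commonly use. The sketch below is plain Python, not GPU code: it emulates, one step at a time, how a CUDA-style kernel assigns one element of SAXPY (y = a*x + y) to each thread by computing a global index from a block index, the block size, and a thread index. The names `saxpy_kernel` and `launch` and the default block size of 4 are illustrative choices; on real hardware, all the (block, thread) pairs would run concurrently.

```python
# Sequential emulation of how a GPU kernel splits SAXPY (y = a*x + y)
# across a grid of thread blocks. On real hardware every (block, thread)
# pair would run concurrently; here we simply loop over them.
import math


def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 a: float, x: list[float], y: list[float]) -> None:
    # Each "thread" computes one global index, mirroring the CUDA idiom
    # i = blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < len(x):  # guard: threads in the last block may be idle
        y[i] = a * x[i] + y[i]


def launch(a: float, x: list[float], y: list[float],
           block_dim: int = 4) -> list[float]:
    # Launch enough blocks to cover every element (ceiling division).
    grid_dim = math.ceil(len(x) / block_dim)
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y)
    return y


if __name__ == "__main__":
    xs = [float(v) for v in range(1, 11)]
    ys = [1.0] * len(xs)
    print(launch(2.0, xs, ys))
```

With 10 elements and a block size of 4, `launch` creates 3 blocks (12 threads); the guard `i < len(x)` leaves the last two threads idle, just as a real kernel must avoid writing past the end of its arrays.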

On highly parallel workloads, these features let GPUs perform calculations faster and more efficiently than CPUs, which is why they excel at AI training and inference. According to Stanford's Institute for Human-Centered AI (HAI), GPU performance has increased roughly 7,000 times since 2003, while price per performance has improved roughly 5,600 times.

GPUs have their own dedicated memory (VRAM) for handling large volumes of data, and they rapidly execute the work the CPU dispatches to them, such as rendering images on the screen.

What is a TPU?

Google’s Tensor Processing Units (TPUs) are custom-built AI accelerators, specifically designed for neural networks and large AI models. These application-specific integrated circuits (ASICs) are ideal for a variety of applications, including chatbots, code generation, content creation, synthetic speech, and vision services.

TPUs enhance the performance of linear algebra computations, which are crucial in machine learning, and significantly reduce the training time for complex neural networks. In summary, TPUs represent a significant advancement in accelerating AI training and inference.
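Most of that linear-algebra work is matrix multiplication. As a hedged illustration (plain Python, nothing TPU-specific), the sketch below writes a fully connected layer's forward pass, Y = X·W, as explicit multiply-accumulate (MAC) steps and counts them; a TPU's matrix units perform large numbers of these MACs in parallel in hardware. The function name `matmul_with_mac_count` is hypothetical.

```python
# Forward pass of one fully connected layer, Y = X @ W, written as explicit
# multiply-accumulate (MAC) steps using plain Python lists.


def matmul_with_mac_count(X: list[list[float]],
                          W: list[list[float]]) -> tuple[list[list[float]], int]:
    """Return (X @ W, number of multiply-accumulates performed)."""
    m, k = len(X), len(X[0])      # batch size, input features
    k2, n = len(W), len(W[0])     # input features, output features
    if k != k2:
        raise ValueError("inner dimensions must match")
    Y = [[0.0] * n for _ in range(m)]
    macs = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += X[i][p] * W[p][j]  # one multiply-accumulate
                macs += 1
            Y[i][j] = acc
    return Y, macs


if __name__ == "__main__":
    X = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]  # batch of 2 inputs, 3 features
    W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 inputs -> 2 outputs
    print(matmul_with_mac_count(X, W))
```

The MAC count grows as m × n × k: a single 4096-by-4096 layer applied to a batch of 1,024 inputs already needs 1,024 × 4,096 × 4,096 ≈ 1.7 × 10^10 MACs, which is why dedicated matrix hardware pays off.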

Key Features:

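- At the heart of each TPU chip are TensorCores (not to be confused with NVIDIA's GPU Tensor Cores), which contain matrix-multiply units (MXUs) designed for tensor operations. They excel at matrix multiplications and mixed-precision calculations, delivering high computational throughput.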

- TPUs can also be interconnected in clusters called TPU pods, which offer substantial computational power with high-speed interconnects. This interconnectivity is crucial for scaling deep learning tasks and accommodating the growing computational demands of modern AI research and applications.

- TPUs handle massive AI computational demands with high throughput and low latency, making them ideal for rapid training and inference of complex models. They utilize extensive parallelism to process multiple computations simultaneously, essential for training deep learning models with vast data and numerous parameters.
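The mixed-precision calculations mentioned above commonly pair bfloat16 inputs with float32 accumulation on TPUs. The following sketch emulates that pattern in standard-library Python under stated assumptions: `to_bfloat16` rounds a value to bfloat16 precision by keeping the upper 16 bits of its float32 encoding (round-to-nearest-even, with NaN handling omitted), and `mixed_precision_dot` multiplies bfloat16 inputs and accumulates in float32. All function names are hypothetical, and this is a numerical model, not how the hardware is programmed.

```python
import struct


def to_float32(x: float) -> float:
    # Round a Python float (float64) to the nearest IEEE 754 float32 value.
    return struct.unpack("<f", struct.pack("<f", x))[0]


def to_bfloat16(x: float) -> float:
    # bfloat16 keeps float32's sign bit and 8 exponent bits but only the
    # top 7 mantissa bits. Emulate it by rounding the float32 bit pattern
    # to its upper 16 bits (round-to-nearest-even; NaN handling omitted).
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    bits = ((bits + rounding_bias) >> 16) << 16
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))[0]


def mixed_precision_dot(a: list[float], b: list[float]) -> float:
    # Inputs are rounded to bfloat16; each product and the running sum are
    # kept in float32 ("bf16 multiply, fp32 accumulate"). The product of
    # two bfloat16 values fits exactly in float32, so only the sum rounds.
    acc = 0.0
    for x, y in zip(a, b):
        product = to_float32(to_bfloat16(x) * to_bfloat16(y))
        acc = to_float32(acc + product)
    return acc


if __name__ == "__main__":
    print(to_bfloat16(1.001))
    print(mixed_precision_dot([1.001, 2.0], [1.0, 3.0]))
```

For example, `to_bfloat16(1.001)` returns `1.0`, because the spacing between bfloat16 values just above 1.0 is 2^-7 = 0.0078125. Accumulating in float32 rather than bfloat16 keeps rounding error from growing quickly as long sums are built up.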

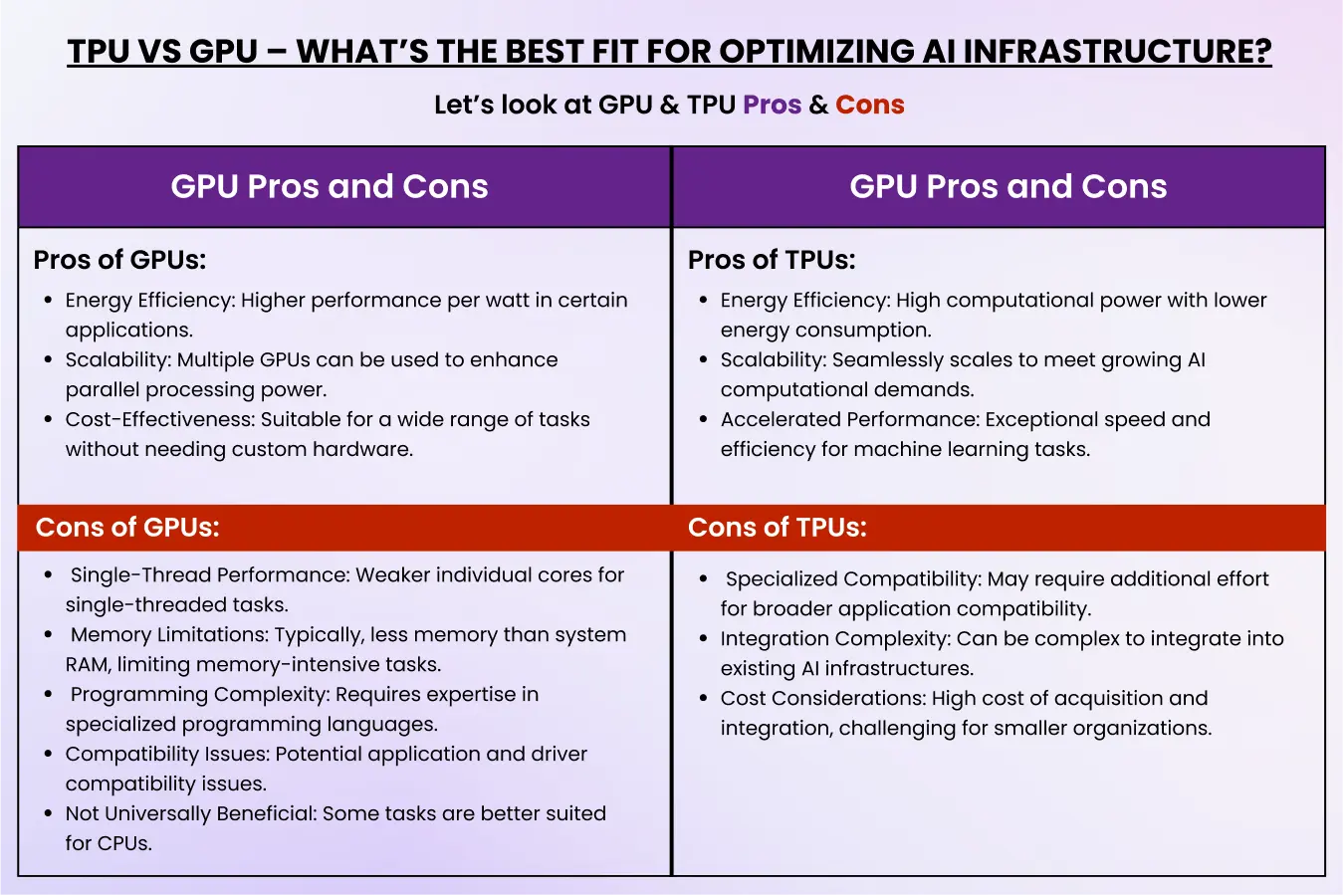

Pros and Cons of TPUs and GPUs: Evaluating Their Role in AI Infrastructure

Overall Comparison of TPU vs GPU

Optimizing AI Workloads with TPU vs GPU

- TPUs and GPUs are each optimized to handle the diverse demands of AI workloads efficiently. TPUs excel at neural network operations by focusing on tensor computations, which are fundamental to deep learning. This specialization lets TPUs reduce energy consumption through custom memory hierarchies that cut latency and energy overhead during data processing. Techniques such as quantization and sparsity can further reduce the cost of arithmetic operations, maintaining high performance while conserving power.

- GPUs, in contrast, leverage parallel processing across many cores to maximize throughput for a wide range of AI tasks. They use features such as power gating and dynamic voltage and frequency scaling to match energy consumption to workload demands during intensive computation. GPUs also optimize memory access patterns and rely on software techniques such as kernel fusion (combining several operations into one kernel so intermediate results need not round-trip through memory) and loop unrolling to improve efficiency during data transfers and calculations.

- These distinct optimizations let both TPUs and GPUs deliver robust performance on AI workloads while managing energy use, each suited to the requirements of different kinds of AI applications.
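Quantization, one of the techniques mentioned above, stores values in a low-precision integer format and rescales afterward. The sketch below shows a simple symmetric, per-tensor int8 scheme in standard-library Python: values are mapped to integers in [-127, 127] with one scale factor, the dot product is accumulated in integer arithmetic, and the result is rescaled once. It is a generic illustration under these assumptions, not the scheme any particular TPU or GPU uses; the function names are hypothetical, and sparsity is not shown.

```python
# Symmetric per-tensor int8 quantization with integer accumulation.


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using a single scale factor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest level, then clamp in case of floating-point drift.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from int8 codes."""
    return [x * scale for x in q]


def int8_dot(a: list[float], b: list[float]) -> float:
    # Quantize both vectors, multiply-accumulate in integer arithmetic
    # (hardware typically uses an int32 accumulator), then rescale once.
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    acc = sum(x * y for x, y in zip(qa, qb))
    return acc * sa * sb


if __name__ == "__main__":
    a = [1.0, -0.5, 0.25]
    b = [0.5, 0.5, 1.0]
    print(quantize_int8(a))
    print(int8_dot(a, b))
```

`int8_dot([1.0, -0.5, 0.25], [0.5, 0.5, 1.0])` returns a value close to the exact result of 0.5; the small error comes from rounding each input to one of 255 levels.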

Applications

AI hardware is diverse, and GPUs and TPUs each excel in different domains. Knowing where each one shines can help you choose the right processor for a given AI task, whether that is graphics rendering or training large language models.

GPU Applications:

- Graphics Rendering and Gaming: GPUs are exceptionally good at creating realistic visuals in video games and processing visual data for applications like virtual reality and computer-aided design. They’re designed to handle complex graphics calculations quickly and efficiently.

- General-Purpose Computing: Beyond gaming, GPUs are widely used in scientific research and industries that require heavy computational power. They excel at speeding up simulations and data analysis tasks by breaking down complex calculations into smaller parts that can be processed simultaneously.

- Deep Learning and AI Training: GPUs play a crucial role in training artificial intelligence models, especially deep learning algorithms used in tasks like image and speech recognition. Their ability to process large amounts of data in parallel makes them ideal for training complex neural networks.

- Edge Computing: In edge devices such as smartphones, drones, and IoT devices, embedded GPUs let AI applications run directly on the device. This is essential for applications that require real-time decision-making without relying on cloud computing.

TPU Applications:

- Deep Learning Model Training: TPUs are specialized processors built for deep learning workloads, including model training. They perform large-scale matrix calculations quickly, which is essential for training the complex neural networks used in AI applications.

- Large Language Models: TPUs are particularly effective at handling the massive computational demands of large language models used in natural language processing. These models require significant processing power to analyze and generate human-like text, which TPUs can provide efficiently.

- Cloud Computing: Integrated into Google Cloud Platform, TPUs offer scalable solutions for businesses and researchers working on AI projects. They provide high computational power for processing large datasets and running AI algorithms without the need for organizations to invest in and maintain their own hardware infrastructure.

Practical Use Cases of GPUs and TPUs

Here are some use cases showing how TPUs and GPUs are making a significant impact in the AI industry:

- OpenAI harnesses the computational might of GPUs to train its groundbreaking AI models, such as GPT-3. This model, with its 175 billion parameters, was trained using GPUs on massive datasets, pushing the boundaries of natural language processing and AI understanding.

- Waymo, in its pursuit of self-driving cars, uses TPUs in its data centers to train models that process enormous volumes of sensor data. Those models must make split-second decisions in real time on the road, and TPUs help Waymo accelerate their training, supporting its work to improve the safety and efficiency of autonomous driving technology.

- NVIDIA, a leader in GPU technology, not only uses GPUs for AI research and algorithm development but also integrates them into products like autonomous vehicle platforms and AI-powered analytics tools. This integration helps NVIDIA advance the capabilities of AI across industries, from transportation to healthcare and beyond.

- Microsoft leverages GPUs to enhance its suite of products with AI capabilities, integrating advanced analytics and machine learning into services like Azure cloud and productivity tools. This integration enables Microsoft to deliver innovative solutions that improve productivity and decision-making for businesses worldwide.
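The 175-billion-parameter figure quoted for GPT-3 shows why training runs are spread across many accelerators. The back-of-the-envelope sketch below estimates the memory needed just to hold a model's weights at different precisions and the minimum number of devices that could store them. The 80 GB per-device capacity is a hypothetical round number, not the specification of any product named here, and the estimate ignores activations, gradients, and optimizer state, which add substantially more during training. The function names are illustrative.

```python
# Back-of-the-envelope memory needed just to store a model's weights.
import math

BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    # Decimal gigabytes (1 GB = 1e9 bytes) for the raw weights only;
    # activations, gradients, and optimizer state are ignored.
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_devices(num_params: int, dtype: str, device_memory_gb: float) -> int:
    # Fewest accelerators whose combined memory could hold the weights,
    # assuming perfect sharding and no other memory use.
    return math.ceil(weight_memory_gb(num_params, dtype) / device_memory_gb)


if __name__ == "__main__":
    gpt3_params = 175_000_000_000
    assumed_device_gb = 80.0  # hypothetical per-device capacity
    for dtype in BYTES_PER_PARAM:
        gb = weight_memory_gb(gpt3_params, dtype)
        n = min_devices(gpt3_params, dtype, assumed_device_gb)
        print(f"{dtype}: {gb:.0f} GB, at least {n} devices")
```

At 175 billion parameters, the weights alone take 700 GB in float32, 350 GB in bfloat16, and 175 GB in int8, so even under these generous assumptions they cannot fit on a single 80 GB device.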

These examples highlight how TPUs and GPUs are pivotal in driving AI innovation, enabling companies to tackle complex challenges and deliver transformative experiences across diverse applications.

Conclusion

In conclusion, the choice between TPU and GPU for AI infrastructure depends on the specific needs of your application. GPUs excel in versatility and broad software support across many AI tasks. TPUs, as application-specific integrated circuits, offer performance advantages for specific AI computations, making them well suited to workloads that demand high throughput and efficiency. Ultimately, the decision hinges on balancing computational requirements against the characteristics of the AI workload to optimize both performance and cost-effectiveness.

If you are looking to make your infrastructure AI-ready, contact our expert AI team. Aptly leverages advanced GPUs and TPUs to optimize AI workloads and build AI-ready infrastructure across on-premises, cloud, and data centers.